Cluster Mode

This topic describes how to install and upgrade Release as a cluster. Running Release in cluster mode gives you a highly available (HA) Release setup. Release supports the following HA mode:

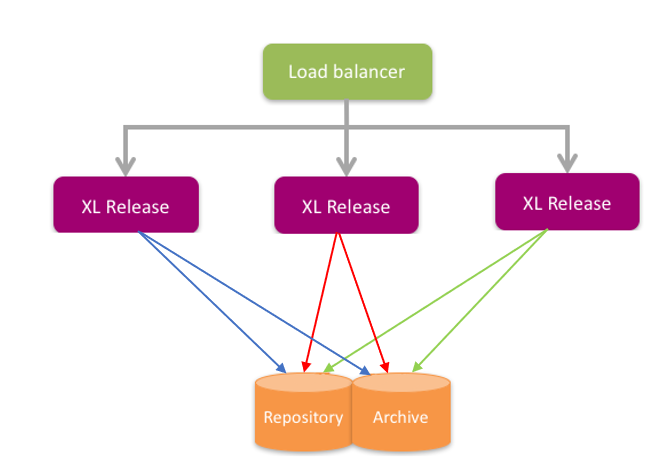

- Active/Active: Three or more Release nodes running simultaneously to process all requests. A load balancer is used to distribute requests.

Prerequisites

Using Release in cluster mode requires the following:

- Release must be installed according to the system requirements. For more information, see requirements for installing Release.

- The Release repository and archive must be stored in an external database, as described in the Configure the Release SQL repository in a database topic. Cluster mode is not supported for the default configuration with an embedded database.

- A load balancer. For more information, see the HAProxy load balancer documentation.

- A Java Message Service (JMS) queue for the Webhooks functionality. For more information, see Webhooks overview.

- The time on all Release nodes must be synchronized through an NTP server.

- All servers running Release must use the same operating system.

- Release servers and load balancers must be on the same network.

- All the Release cluster nodes must reside in the same network segment. This is required for the clustering protocol to function correctly. For optimal performance, it is also recommended that you put the database server in the same network segment to minimize network latency.

- When you use Release in cluster mode, you must specify a shared directory to store generated reports.

You can specify the location of the shared directory in the xl-release.conf file. The parameter that stores the location is:

xl.reporting.engine.location

The default parameter value is reports, and it holds the path of the reports directory, relative to the XL_RELEASE installation directory.
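The following Python sketch illustrates how a value of xl.reporting.engine.location resolves, assuming the rule stated above: a relative value such as the default reports is taken relative to the installation directory, so every node would get its own local directory. In cluster mode, an absolute path on storage mounted by every node avoids that. The helper name, the /opt/xl-release installation path, and the /mnt/xlr-shared mount point are hypothetical.

```python
from pathlib import PurePosixPath


def resolve_reports_location(value: str, install_dir: str) -> PurePosixPath:
    """Hypothetical helper: resolve xl.reporting.engine.location.

    Assumption: a relative value is interpreted relative to the
    XL_RELEASE installation directory; an absolute value is used as-is.
    """
    path = PurePosixPath(value)
    if path.is_absolute():
        return path
    return PurePosixPath(install_dir) / path


# Default value: ends up inside each node's own installation directory.
print(resolve_reports_location("reports", "/opt/xl-release"))  # /opt/xl-release/reports
# Absolute path on a (hypothetical) shared mount that every node can reach.
print(resolve_reports_location("/mnt/xlr-shared/reports", "/opt/xl-release"))  # /mnt/xlr-shared/reports
```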

Setup Procedure

The initial cluster setup consists of:

- A load balancer

- A database server

- Three Release servers

Important: It is recommended to have a multi-node setup with an odd number of nodes to facilitate high fault tolerance in production environments. It is also recommended not to have a cluster with more than five nodes, to prevent database latency issues. However, with some database configuration tuning, you can have a cluster with more than five nodes. Contact Digital.ai Support for more information about setting up a cluster with more than five nodes.
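To see why an odd node count is recommended, the arithmetic below assumes a strict-majority rule (more than half of the nodes must remain reachable), which is the core of the default majority-based network split resolution described under Advanced Configuration. It is a simplified model: it ignores the tie-break that lets the half containing the oldest member survive an even split.

```python
def majority_quorum(nodes: int) -> int:
    """Smallest number of nodes that is strictly more than half the cluster."""
    return nodes // 2 + 1


def tolerated_failures(nodes: int) -> int:
    """Nodes that can be lost while the remaining nodes still form a strict majority."""
    return nodes - majority_quorum(nodes)


for n in range(1, 7):
    print(f"{n} nodes: quorum={majority_quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

Under this model, a fourth node raises the quorum from 2 to 3 without increasing the number of failures the cluster survives (still 1), while a fifth node raises it to 2.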

To set up the cluster, perform the following configuration steps before starting Release.

Step 1 - Set Up External Databases

- See Configure the Release SQL repository in a database.

- If you are upgrading Release on a new cluster, set up the database server in the new cluster, back up your database from the existing Release cluster, and restore it on the new database server.

Both the xlrelease repository and the reporting archive must be configured in an external database.

Note: You can set up PostgreSQL streaming replication for Release to create a high availability (HA) cluster configuration with one or more standby servers ready to take over operations if the primary server fails. For more information, see PostgreSQL Streaming Replication in Release.

Step 2 - Set Up the Cluster in the Release Application Configuration File

All Active/Active configuration settings are specified in the XL_RELEASE_SERVER_HOME/conf/xl-release.conf file, which uses the HOCON format.

- Enable clustering by setting xl.cluster.mode to full (active/active).

- Define ports for different types of incoming TCP connections in the xl.cluster.node section:

| Parameter | Description |
|---|---|
| xl.cluster.mode | Possible values: default (single node, no cluster); full (active/active). Use this property to turn on the cluster mode by setting it to full. |
| xl.cluster.name | A label to identify the cluster. |
| xl.cluster.node.id | Unique ID that identifies this node in the cluster. |
| xl.cluster.node.hostname | IP address or host name of the machine where the node is running. Do not use a loopback address such as 127.0.0.1 or localhost. |
| xl.cluster.node.clusterPort | Port used for cluster-wide communications; defaults to 5531. |
| xl.queue.embedded | Possible values: true or false. Set this to false if you want to use the webhooks feature. |
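Before starting the nodes, it can help to check each node's settings against the rules in the table. The following Python sketch is a hypothetical pre-flight check, not a Release tool: the NodeSettings type and its field names are illustrative, and the requirement that all nodes share the same xl.cluster.name is an assumption (the table only describes it as a label for the cluster).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class NodeSettings:
    """Per-node values read from xl-release.conf (field names are hypothetical)."""
    mode: str             # xl.cluster.mode
    name: str             # xl.cluster.name
    node_id: str          # xl.cluster.node.id
    hostname: str         # xl.cluster.node.hostname
    queue_embedded: bool  # xl.queue.embedded
    cluster_port: int = 5531  # xl.cluster.node.clusterPort (documented default)


def is_loopback(host: str) -> bool:
    """True for names the table says must not be used as the node hostname."""
    host = host.strip().lower()
    return host in ("localhost", "::1") or host.startswith("127.")


def validate_cluster(nodes: List[NodeSettings], use_webhooks: bool) -> List[str]:
    """Return human-readable problems; an empty list means no checked rule was violated."""
    problems: List[str] = []
    for n in nodes:
        if n.mode != "full":
            problems.append(f"{n.node_id}: xl.cluster.mode must be 'full', got '{n.mode}'")
        if is_loopback(n.hostname):
            problems.append(f"{n.node_id}: hostname '{n.hostname}' is a loopback address")
        if use_webhooks and n.queue_embedded:
            problems.append(f"{n.node_id}: xl.queue.embedded must be false for webhooks")
    # Assumption: every node in one cluster uses the same cluster name.
    if len({n.name for n in nodes}) > 1:
        problems.append("nodes disagree on xl.cluster.name")
    ids = [n.node_id for n in nodes]
    if len(ids) != len(set(ids)):
        problems.append("xl.cluster.node.id values are not unique")
    return problems
```

For example, a node copied from the first node without updating xl.cluster.node.id would be reported as a duplicate ID.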

Sample configuration

This is an example of the xl-release.conf configuration for an active/active setup:

```hocon
xl {
  cluster {
    mode = full
    name = "xlr-cluster"
    node {
      clusterPort = 5531
      hostname = "xlrelease-1.example.com"
      id = "xlrelease-1"
    }
  }
  database {
    ...
  }
  queue {
    embedded = false
    ...
  }
}
```

Note: If you are upgrading Release, you can use the existing Release cluster's xl-release.conf file. Copy the existing xl-release.conf file to the new server and update the file with any changes to the cluster name (xl.cluster.name), hostname (xl.cluster.node.hostname), and so on.

Important: If you want to use the webhooks feature in a High Availability (cluster mode) setup, the JMS queue cannot be embedded. It must be external and shared by all nodes in the Release cluster.

Step 3 - Set Up the First Node

- Open a command prompt and run the following server setup command:

Fresh installation:

```shell
./bin/run.sh -setup
```

Upgrade:

```shell
./run.sh -setup -previous-installation XL_RELEASE_SERVER_HOME_EXISTING
```

- Follow the on-screen instructions.

Step 4 - Prepare Another Node in the Cluster

- Zip the contents of the XL_RELEASE_SERVER_HOME/ folder from the first node.

- Copy the ZIP file to another node and unzip it.

- Edit the xl.cluster.node section of the XL_RELEASE_SERVER_HOME/conf/xl-release.conf file.

- Update the values for the specific node.

You do not need to run the server setup command on each node.
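Because only the xl.cluster.node values differ between nodes, a short Python sketch can print the values to enter in each node's copy of xl-release.conf. HOCON accepts dotted path keys such as xl.cluster.node.id, which are equivalent to the nested form in the sample configuration. The host names and the convention of deriving the node ID from the first label of the host name are assumptions for illustration.

```python
def render_node_section(node_id: str, hostname: str, cluster_port: int = 5531) -> str:
    """Return the xl.cluster.node settings for one node, in HOCON path syntax."""
    return (
        f'xl.cluster.node.id = "{node_id}"\n'
        f'xl.cluster.node.hostname = "{hostname}"\n'
        f"xl.cluster.node.clusterPort = {cluster_port}\n"
    )


# Hypothetical three-node cluster; each node gets a unique ID and a real host name.
hosts = ["xlrelease-1.example.com", "xlrelease-2.example.com", "xlrelease-3.example.com"]
for host in hosts:
    node_id = host.split(".")[0]  # assumed naming convention: first DNS label
    print(f"# {node_id}")
    print(render_node_section(node_id, host))
```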

Step 5 - Set Up the Load Balancer

When running in cluster mode, you must configure a load balancer to route the requests to the available servers.

The load balancer checks the /ha/health endpoint with a HEAD or GET request to verify that the node is up. This endpoint will return:

- A 200 OK HTTP status code if it is the currently active node
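To check a node by hand the same way the load balancer does, the following Python sketch sends HEAD /ha/health and treats only a 200 response as healthy. The base URL form (for example, http://node_1:5516) is assumed from the HAProxy sample; any connection error, timeout, or non-200 status counts as "down".

```python
import http.client
import urllib.request


def health_url(base_url: str) -> str:
    """Build the health endpoint URL from a node's base URL."""
    return base_url.rstrip("/") + "/ha/health"


def node_is_up(base_url: str, timeout: float = 2.0) -> bool:
    """Send HEAD /ha/health and report True only for an HTTP 200 response."""
    request = urllib.request.Request(health_url(base_url), method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status == 200
    except (OSError, http.client.HTTPException):
        # OSError covers refused connections, timeouts, and DNS errors, as well as
        # urllib's URLError/HTTPError (HTTPError is raised for 4xx/5xx responses).
        return False
```

For example, node_is_up("http://node_1:5516") returns True only while that node answers the health check with 200 OK.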

This is a sample haproxy.cfg configuration for HAProxy. Ensure that your configuration is hardened before using it in a production environment.

```haproxy
global
  log 127.0.0.1 local0
  log 127.0.0.1 local1 notice
  log-send-hostname
  maxconn 4096
  pidfile /var/run/haproxy.pid
  user haproxy
  group haproxy
  daemon
  stats socket /var/run/haproxy.stats level admin
  ssl-default-bind-options no-sslv3
  ssl-default-bind-ciphers ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES128-SHA:DHE-RSA-AES128-SHA:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:AES128-GCM-SHA256:AES128-SHA256:AES128-SHA:AES256-GCM-SHA384:AES256-SHA256:AES256-SHA:DHE-DSS-AES128-SHA

defaults
  balance roundrobin
  log global
  mode http
  option redispatch
  option httplog
  option dontlognull
  option forwardfor
  # Timeouts without a unit are in milliseconds
  timeout connect 5000
  timeout client 50000
  timeout server 50000

listen stats
  bind :1936
  mode http
  stats enable
  timeout connect 10s
  timeout client 1m
  timeout server 1m
  stats hide-version
  stats realm Haproxy\ Statistics
  stats uri /
  stats auth stats:stats

frontend default_port_80
  bind :80
  # reqadd was removed in HAProxy 2.1; http-request add-header works in 1.5 and later
  http-request add-header X-Forwarded-Proto http
  maxconn 4096
  default_backend default_service

backend default_service
  # Sticky sessions: Release does not share HTTP sessions among nodes
  cookie JSESSIONID prefix
  # Health check; the method must be written in uppercase (HEAD)
  option httpchk HEAD /ha/health HTTP/1.0
  server node_1 node_1:5516 cookie node_1 check inter 2000 rise 2 fall 3
  server node_2 node_2:5516 cookie node_2 check inter 2000 rise 2 fall 3
  server node_3 node_3:5516 cookie node_3 check inter 2000 rise 2 fall 3
```
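With check inter 2000 rise 2 fall 3, HAProxy probes each server every 2000 ms, considers a server up after 2 consecutive successful checks, and down after 3 consecutive failed checks. The Python sketch below models that counting so you can reason about failover timing: a node that stops answering is taken out of rotation after roughly 3 × 2 s = 6 s. It is a simplified model, not HAProxy's implementation; in particular, HAProxy's start-up state handling differs, so the start_up parameter is an assumption.

```python
from typing import Iterable, List


def simulate_checks(results: Iterable[bool], rise: int = 2, fall: int = 3,
                    start_up: bool = False) -> List[bool]:
    """Return the node state (True = up) after each health-check result.

    Simplified model: a down node comes up after `rise` consecutive
    successes; an up node goes down after `fall` consecutive failures.
    """
    up = start_up
    streak = 0  # consecutive results that contradict the current state
    states: List[bool] = []
    for ok in results:
        if ok == up:
            streak = 0  # result agrees with the current state; reset the counter
        else:
            streak += 1
            if (not up and streak >= rise) or (up and streak >= fall):
                up = not up  # threshold reached: flip the state
                streak = 0
        states.append(up)
    return states
```

In this model, a single failed check between successes (a brief blip) never removes the node, because the failure streak resets on the next success.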

Previous versions of Release included a sample haproxy.cfg configuration for HAProxy that had the following line:

```haproxy
option httpchk head /ha/health HTTP/1.0
```

Starting with version 9.5, the HTTP method is case-sensitive and must be written as HEAD.

Limitation on HTTP Session Sharing and Resiliency in Cluster Setups

Release does not share HTTP sessions among nodes. If the active Release node becomes unavailable:

- All users will effectively be logged out and will lose any data that was not stored to the database.

- Any script tasks that were running on the previously active node will have the failed status. When a new node becomes the active node, which happens automatically, you can restart failed tasks.

Performing a TCP check or a GET request on / indicates that a node is running.
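A TCP check only confirms that something is accepting connections on the node's port; unlike the /ha/health check used by the load balancer, it does not report the node's health status. A minimal Python sketch of such a check, with host and port as placeholders:

```python
import socket


def tcp_check(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened within the timeout."""
    try:
        # create_connection resolves the host and tries each address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused connection, timeout, or name resolution failure.
        return False
```

For example, tcp_check("node_1", 5516) returns True as soon as the node's HTTP port accepts connections.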

Step 6 - Start the Nodes

Beginning with the first node that you configured, start Release on each node. Ensure that each node is fully up and running before starting the next one.
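If you script the startup, the rule "start a node only after the previous one is fully up" can be sketched in Python as below. The start_node and is_up callables are hypothetical hooks you supply, for example a remote command that runs the Release start script and a HEAD /ha/health probe; the timeout and polling interval are arbitrary example values.

```python
import time
from typing import Callable, Sequence


def start_sequentially(
    nodes: Sequence[str],
    start_node: Callable[[str], None],
    is_up: Callable[[str], bool],
    timeout_s: float = 600.0,
    poll_s: float = 5.0,
) -> None:
    """Start nodes in order, waiting until each one reports healthy before the next."""
    for node in nodes:
        start_node(node)
        deadline = time.monotonic() + timeout_s
        while not is_up(node):
            if time.monotonic() >= deadline:
                raise TimeoutError(f"{node} did not become healthy within {timeout_s} s")
            time.sleep(poll_s)
```

Raising on timeout, rather than moving on, keeps a failed node from silently leaving the cluster smaller than intended.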

Advanced Configuration

Network Split Resolution

In the case of a network split, the Release cluster has a default strategy configured to avoid the creation of multiple independent cluster partitions from the original cluster.

The default configured strategy is the MajorityLeaderAutoDowningProvider.

This auto-downing strategy shuts down every cluster partition that is in the minority, that is, where partition size < cluster size / 2.

When the cluster is split into two equal parts (partition size == cluster size / 2), the partition containing the oldest active cluster member survives. If no partition contains a sufficient number of members, quorum cannot be achieved and the whole cluster shuts down. If this occurs, an external restart of the cluster is required.
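The decision rule described above can be expressed as a small Python model for reasoning about split scenarios. It reflects the documented behavior only; it is not the MajorityLeaderAutoDowningProvider implementation, and the function names are hypothetical.

```python
from typing import List


def majority_survives(partition_size: int, cluster_size: int, has_oldest: bool) -> bool:
    """Does a partition stay up under the majority rule described above?"""
    if 2 * partition_size > cluster_size:
        return True         # strict majority
    if 2 * partition_size == cluster_size:
        return has_oldest   # even split: the oldest active member breaks the tie
    return False            # minority: shut down


def surviving_partitions(sizes: List[int], oldest_index: int) -> List[int]:
    """Indexes of surviving partitions; an empty list means the whole cluster shuts down."""
    total = sum(sizes)
    return [i for i, size in enumerate(sizes)
            if majority_survives(size, total, i == oldest_index)]
```

For a five-node cluster split 2 | 2 | 1, no partition has more than half of the members, so surviving_partitions([2, 2, 1], 0) is empty and the cluster needs an external restart.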

An alternative strategy, available by default, is the OldestLeaderAutoDowningProvider. This strategy can be activated in the XL_RELEASE_SERVER_HOME/conf/xl-release.conf file by specifying:

```hocon
xl {
  cluster {
    akka {
      cluster {
        downing-provider-class = "com.xebialabs.xlplatform.cluster.full.downing.OldestLeaderAutoDowningProvider"
      }
    }
  }
  ...
}
```

This strategy will keep the partition with the oldest active node alive. It is suitable for a Release cluster which needs to stay up as long as possible, without depending on the number of members in the partitions.
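To contrast the two strategies, the following Python sketch models both rules as documented: the oldest-leader rule keeps only the partition holding the oldest active node, regardless of size, while the majority rule keeps a strict majority or the oldest half of an even split. The function names are hypothetical and the models ignore timing and failure-detection details.

```python
from typing import List


def oldest_leader_survivor(sizes: List[int], oldest_index: int) -> List[int]:
    """OldestLeader model: only the partition holding the oldest node survives."""
    return [oldest_index] if 0 <= oldest_index < len(sizes) else []


def majority_survivor(sizes: List[int], oldest_index: int) -> List[int]:
    """Majority model: strict majority, or the oldest side of an even split."""
    total = sum(sizes)
    return [i for i, s in enumerate(sizes)
            if 2 * s > total or (2 * s == total and i == oldest_index)]


# Three-node cluster split 1 | 2, where the single node is the oldest member.
print(oldest_leader_survivor([1, 2], oldest_index=0))  # [0]: the lone oldest node
print(majority_survivor([1, 2], oldest_index=0))       # [1]: the two-node majority
```

The trade-off shows in a three-way split: the oldest-leader rule always keeps one partition running, whereas the majority rule may shut down the whole cluster.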