AI-Powered Error Classification

This feature is currently available for managed Private Cloud.

Overview

AI-Powered Error Classification automatically analyzes failed mobile and web tests and assigns each failure to a meaningful error category. It identifies the underlying cause of a failure, such as a Device issue, Network instability, or an Application crash, and groups similar failures together.

Once errors are classified, QA teams can quickly understand patterns, recurring root causes, and the distribution of issues across their test runs.

This feature works seamlessly across supported frameworks in mobile and web testing (Appium, Espresso, XCUITest, Selenium, etc.).

Where Error Classification Is Useful

Modern automation produces massive amounts of test results. In teams running hundreds or thousands of tests per day, manually inspecting each test failure is time-consuming and inefficient. Failures often repeat across builds, devices, or environments, making it difficult to see the true impact of systemic problems.

Error Classification solves these challenges by:

- Reducing triage time by automatically identifying root-cause categories

- Highlighting patterns that are otherwise lost in raw logs

- Differentiating real product defects from noise, such as infrastructure instability

- Helping teams prioritize work (e.g., resolving network issues before debugging test scripts)

- Improving release decision-making through clear visual summaries

Instead of digging through logs, teams can immediately see why tests failed and focus on what matters most.

How It Works

Whenever a test run ends with a failure, the system automatically:

- Extracts the relevant error strings and logs

- Processes them through the Error Classification model

- Assigns a category (or marks it as "Unclassified" if no confident match exists)

This step is automatic; no manual action is required.
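The three steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the product's implementation: the feature uses an AI model, whereas this sketch substitutes a simple keyword-scoring heuristic to show the shape of the pipeline (extract, score, assign or fall back to "Unclassified"). The names `CATEGORY_HINTS`, `CONFIDENCE_THRESHOLD`, `extract_error_text`, and `classify`, the keyword lists, and the "more than half of the evidence" rule are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical keyword hints per category. The real feature uses an AI model;
# this lookup only stands in for it to show the shape of the pipeline.
CATEGORY_HINTS: dict[str, tuple[str, ...]] = {
    "Application": ("crash", "application not responding", "unhandled exception", "failed to launch"),
    "Script": ("nosuchelement", "stale element", "selector"),
    "Device": ("device disconnected", "low storage", "screen locked"),
    "Assertion": ("assertionerror", "expected", "mismatch"),
    "Environment": ("session interrupted", "host unavailable"),
    "Browser": ("webview", "tab crashed", "browser failed to start"),
    "Network": ("timeout", "dns", "connection refused", "503"),
    "Framework": ("driver initialization", "sessionnotcreated"),
}

# Hypothetical rule: the winning category must hold MORE than this share of matched hints.
CONFIDENCE_THRESHOLD = 0.5


@dataclass
class Classification:
    category: str
    confidence: float


def extract_error_text(log_lines: list[str]) -> str:
    """Step 1: keep only log lines that look like errors (hypothetical heuristic)."""
    markers = ("error", "exception", "fail", "timeout", "crash")
    kept = [line.lower() for line in log_lines if any(m in line.lower() for m in markers)]
    return "\n".join(kept)


def classify(error_text: str) -> Classification:
    """Steps 2 and 3: score each category, then apply the confidence rule."""
    text = error_text.lower()
    # Score = number of distinct hints for that category found in the text.
    scores = {cat: sum(h in text for h in hints) for cat, hints in CATEGORY_HINTS.items()}
    total = sum(scores.values())
    if total == 0:
        return Classification("Unclassified", 0.0)  # no evidence at all
    best = max(scores, key=scores.__getitem__)
    confidence = scores[best] / total
    # Ties and scattered evidence ("mixed" signatures) fall back to Unclassified.
    if confidence <= CONFIDENCE_THRESHOLD:
        return Classification("Unclassified", confidence)
    return Classification(best, confidence)
```

Under these assumptions, a log whose only error line is a read timeout comes out as Network, while a line mentioning both a crash and a timeout splits the evidence evenly and falls back to Unclassified.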

Navigating to Error Categories

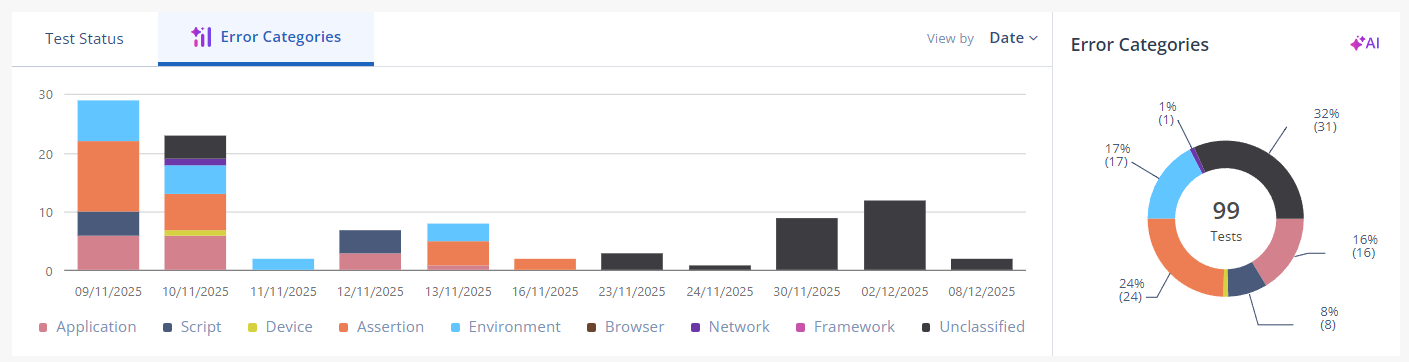

On the Tests page, click the Error Categories tab to access visual analytics.

Here you will see:

- A stacked bar chart of failures over time, grouped by category

- A donut chart showing the percentage distribution of failures by category

- The total number of tests included in the selected period

This visualization gives an instant snapshot of failure types across any chosen timeframe. As with regular test analytics, users can apply filter sets and change the grouping with View by: Date / Device / OS / App Version / Framework.

This makes it possible to compare trends and isolate root causes, for example:

- Which OS version causes the most Application crashes

- Whether a new device model introduces Script instability

- Network failure patterns across test labs

All filtering logic behaves exactly like other analytics screens users are already familiar with.
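The figures on this screen are aggregations over classified failures. The sketch below illustrates them under assumed inputs: the record fields (`category`, `os`, `device`) and the function names are hypothetical, and this is not the product's implementation. It computes the percentage split shown in the donut chart, per-category counts for a "View by" dimension, and answers a question such as "Which OS version causes the most Application crashes?".

```python
from collections import Counter, defaultdict
from typing import Optional

# Hypothetical record shape: one dict per classified failure, e.g.
# {"category": "Network", "os": "Android 14", "device": "Pixel 8"}.
# The field names are assumptions, not the product's data model.
Failure = dict[str, str]


def category_distribution(failures: list[Failure]) -> dict[str, float]:
    """Share of failures per category, in percent (what the donut chart shows)."""
    counts = Counter(f["category"] for f in failures)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {cat: 100.0 * n / total for cat, n in counts.most_common()}


def counts_by_dimension(failures: list[Failure], dimension: str) -> dict[str, Counter]:
    """Per-category counts for each value of a "View by" dimension such as "os"."""
    grouped: defaultdict[str, Counter] = defaultdict(Counter)
    for f in failures:
        grouped[f[dimension]][f["category"]] += 1
    return dict(grouped)


def top_value_for_category(failures: list[Failure], dimension: str, category: str) -> Optional[str]:
    """Return the dimension value with the most failures in `category`, or None."""
    per_value = counts_by_dimension(failures, dimension)
    hits = {value: c[category] for value, c in per_value.items() if c[category] > 0}
    return max(hits, key=hits.__getitem__) if hits else None
```

For example, `top_value_for_category(failures, "os", "Application")` returns the OS value with the most Application failures; changing the dimension or category answers similar questions.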

Error Categories

The table below lists all error categories currently supported by the classifier.

| Category | Description |
|---|---|
| Application | Issues caused by the app under test itself: crashes, ANRs, unhandled exceptions, or the app failing to launch. |
| Script | Problems in the automation script: incorrect selectors, timing issues, or incorrect flow assumptions. |
| Device | Failures triggered by the device: disconnects, low storage, unresponsiveness, or screen lock. |
| Assertion | Failed validations: expected text or value missing, UI state mismatches, or explicit assertion failures. |
| Environment | Instability in the lab: cloud session interruptions or host machine issues. |
| Browser | Browser-related issues for mobile web and hybrid apps: browser not opening, tab crashes, or WebView rendering errors. |
| Network | Connectivity failures: timeouts, DNS issues, backend unavailability, or request errors. |
| Framework | Failures originating in the underlying test framework: driver initialization errors or framework-level exceptions. |
| Unclassified | Errors that cannot be confidently categorized: rare, mixed, or incomplete failure signatures. |

Summary

Error Classification transforms raw, noisy automation failures into clear, meaningful insights. With automated categorization, powerful visualizations, and seamless integration into the Tests page, teams get a faster, clearer understanding of what is breaking and why.

This accelerates triage, improves reliability, and strengthens every release cycle.